W1021 Computer History

Tatsat-jha (talk | contribs) (→The Modern Concept: add markup) |

Jeff-strong (talk | contribs) m (→The Modern Concept: verified editorial pending review) |

||

| (13 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

== Introduction == | == Introduction == | ||

Computers have undergone a long and rich history with several variations and developments throughout time. | Computers have undergone a long and rich history with several variations and developments throughout time. As a developer, it's important to understand this history as computational devices continue to grow and evolve. This experience provides a cursory view of how modern computers were developed and a glimpse into the future of computation. | ||

== Origins == | |||

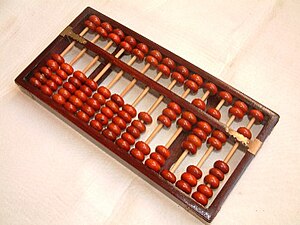

Many analog devices were created in early human history from a need to keep track of growing numbers of people and property. The '''Abacus''' is an example of such a device. It was used as a computational aid for the people who operated them, known as computers. A standard | Many analog devices were created in early human history from a need to keep track of growing numbers of people and property. The '''Abacus''' is an example of such a device. [[File:Boulier1.JPG|thumb|link=|An abacus]] It was used as a computational aid for the people who operated them, known as computers. A standard abacus has four rungs with nine beads on each rung. The rungs represented 1s, 10s, 100s, and 1000s. This allowed one to easily perform basic mathematical calculations and keep track of growing early civilization. | ||

In fact, as human civilization expanded, | In fact, as human civilization expanded, several technological advancements were made to aid exploration and computation. For example, the '''Astrolabe''' was an early computational device used in sea navigation through basic astronomical computation. Several of these sorts of early machines were created to perform growingly more complex calculations, tracking time, position, etc. | ||

Military | Military applications were most common for calculations given the dozen variables needed to accurately shoot artillery. In the past, hand-operated calculating machines would be used to pre-calculate tables of values with several variables<ref name="Precomp">[https://en.wikipedia.org/wiki/Precomputation Precomputation-Wiki]</ref>. These tables would then be used for a specific application. The issue with this was the room for error with pre-computed tables and the specificity they required: a new cannon required a different set of pre-computed values. | ||

[[File:CharlesBabbage.jpg|thumb|center|link=|200px|Charles Babbage]] | |||

This led to the conceptual inventions of '''Charles Babbage''', often deemed the father of computation<ref name="Babbage">[https://www.britannica.com/biography/Charles-Babbage Charles-Babbage Britannica]</ref>. Specifically, he thought of two machines: '''The Difference Engine''' and '''The Analytical Engine'''. | |||

[[File:Babbage difference engine drawing.gif|thumb|right|link=|Difference engine (a small portion)-drawing]] | |||

The Difference Engine was meant to compute mathematical tables via polynomial functions<ref name="Babbage">[https://www.britannica.com/biography/Charles-Babbage Charles-Babbage Britannica]</ref>. It would have been a significantly improved process over the hand devices used by human computers to formulate the many look-up tables that had to be created. The polynomial basis of the Difference Engine was that whichever functions were needed by scientists and navigators could be approximated with polynomial functions. | |||

After this, Babbage had the idea for the Analytical Engine, meant to be a general computational device that could receive input from punch cards and provide output through a printer, bells, and curve plotters<ref name="Babbage">[https://www.britannica.com/biography/Charles-Babbage Charles-Babbage Britannica]</ref>. Neither engine was completed during Babbage's lifetime but would provide the basis for computation in the future. | |||

[[File:Babbages Analytical Engine, 1834-1871. (9660574685).jpg|thumb|left|link=|Babbage's Analytical Engine (a small portion)]] | |||

<br clear='all'/> | |||

== The Modern Concept == | |||

Around the turn of the 20th century, America had a significant issue. The booming population made computing census data next to impossible. It would take so long, in fact, that by the time the data was computed, it would already be outdated. German-American statistician and inventor '''Herman Hollerith'''<ref name="Herman">[https://en.wikipedia.org/wiki/Herman_Hollerith Herman Hollerith Wiki]</ref> would solve this by inventing an electromechanical tabulating machine—'''The Punch Card Tabulator'''<ref name="IBM">[https://www.ibm.com/ibm/history/ibm100/us/en/icons/tabulator/ IBM]</ref>—which could keep track of the massively growing population with punch cards. This invention came from several works using the punch card as the basis for input such as Babbage's Difference Engine<ref name="IBM">[https://www.ibm.com/ibm/history/ibm100/us/en/icons/tabulator/ IBM]</ref>. | |||

The US Census saved millions using these machines and reduced the time to compute to years from decades in the 1890 Census. Herman Hollerith used his invention to create the Computing Tabulating Record Company (CTR), which was renamed to the International Business Machines Corporation, famously known as '''IBM'''.<ref>[https://www.computerhistory.org/brochures/g-i/international-business-machines-corporation-ibm/ IBM-Computer History]</ref> | |||

From here, computers evolved greatly and perhaps most notably with the famous mathematician '''Alan Turing'''. Turing is known for the concept of '''Turing Completeness''' and the '''Turing Machine''', which was a mathematical model of an abstract machine that would operate by manipulating symbols on a strip of tape (The BrainF*ck Programming Language is very similar to the original Turing Machine). Alan Turing created the Turing Machine during his work on the '''Entscheidungsproblem''', saying that the Turing Machine could compute any mathematical algorithm it was given<ref name="Turing">[https://www.britannica.com/biography/Alan-Turing Alan-Turing Britannica]</ref>. | |||

= | [[File:Alan Turing Aged 16.jpg|thumb|200px|link=|Alan Turing (age 16)]] | ||

This would spawn the basis of programming languages and the criterion of whether a language is Turing-Complete, essentially meaning if it could do everything a Turing Machine could. | |||

This concept can get very complex very quickly but just know that Quantum Computers are using laws of | The first full programmable computer was created by a German engineer, '''Konrad Zuse'''. The major concept of his machine, called the Z3, used a binary system instead of the decimal system, which was used since the time of the abacus. This came from the fact that electrical wires are best represented as two values: on or off, true or false, 0 or 1. Several electrical wires could be arranged and used together to create different types of '''logic gates''' and represent all sorts of control flow, memory allocation, and be truly programmable. | ||

[[File:Konrad Zuse (1992).jpg|thumb|200px|left|link=|Konrad Zuse (1992)]] | |||

<br clear='all'/> | |||

== A Look into the Future == | |||

Computers have come a long way from the human-driven calculations and even from the Z3. Semiconductors and microchip technology allow computational speeds that could only be dreamed of in the past. However, it seems the next evolution of computers will come in the form of '''Quantum Computing'''. | |||

This concept can get very complex very quickly but just know that Quantum Computers are using laws of quantum mechanics such as superposition and entanglement to perform computations that traditional computers cannot. This sector is rapidly emerging and certainly one that developers should watch in the years to come. | |||

Overall, the major trends in computational history come from a need to keep track of people and property as well as being able to quickly compute progressively more involved, in-depth, and challenging computational problems. From this, it's easy to understand how the applications of computers have grown and evolved over time as well as how Computer Science is so entwined with the fields of electrical engineering, mathematics, and now quantum mechanics. | |||

== Key Concepts == | == Key Concepts == | ||

{{KeyConcepts| | |||

* '''Origins''' | |||

** Growing civilization required that people (computers) use computational aids such as an abacus to keep track of people and property. | |||

** Human computers would make precomputed tables that would be released in manuals for use in the military and other fields. | |||

** Charles Babbage thought up the concept of the Difference Engine and Analytical Engine to compute tabulate polynomial functions more effectively. | |||

* '''The Modern Concept''' | |||

** During the turn of the 20th century, the US Census found it nearly impossible to compute the millions of people living in the United States. | |||

** Herman Hollerith created the Punch Card Tabulator using Babbage's concept with electrical machinery and punch cards for input. He then created the Computing Tabulating Record Company, which then became IBM. | |||

** Alan Turing created the concept of the Turing Machine, which was an abstract machine that manipulated symbols on a strip of tape. This simple design allowed it to compute any mathematical algorithm. | |||

** Konrad Zuse created the first programmable computer, the Z3, by using a binary system that could better represent wires than the decimal system. | |||

* '''A Look Into the Future''' | |||

** The future of computers looks bright in the field of Quantum Computing. | |||

** Quantum Computing uses principle of quantum mechanics such as superposition, entanglement, and so on, allowing it to perform operations that traditional computers can't. | |||

** Throughout computer history, computer science has become entwined with concepts such as math, electrical engineering. Now, quantum mechanics could be the latest. | |||

}} | |||

== References == | == References == | ||

<references/> | |||

Latest revision as of 12:01, 7 February 2023

Introduction

Computers have a long and rich history, with many variations and developments over time. As a developer, it's important to understand this history as computational devices continue to grow and evolve. This experience provides a cursory view of how modern computers were developed and a glimpse into the future of computation.

Origins

Many analog devices were created in early human history out of a need to keep track of growing numbers of people and property. The Abacus is an example of such a device. It served as a computational aid for the people who operated it, known as computers. A simple abacus might have four rods with nine beads on each rod, with the rods representing 1s, 10s, 100s, and 1000s. This made it easy to perform basic arithmetic and keep track of the resources of growing early civilizations.
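To make the place-value idea concrete, here is a minimal Python sketch that models the four-rod abacus described above as a list of bead counts, most significant rod first. It assumes one rod (rung) per decimal digit holding zero to nine beads; real abaci vary in layout, and the function names `to_abacus`, `from_abacus`, and `abacus_add` are hypothetical illustrations.

```python
def to_abacus(n, rods=4):
    """Return bead counts per rod (thousands, hundreds, tens, ones for rods=4)."""
    if not 0 <= n < 10 ** rods:
        raise ValueError(f"{n} does not fit on {rods} rods")
    # Each rod holds one decimal digit, i.e. 0 to 9 beads pushed into place.
    return [(n // 10 ** place) % 10 for place in reversed(range(rods))]


def from_abacus(beads):
    """Read the number back by weighting each rod by its power of ten."""
    value = 0
    for count in beads:
        value = value * 10 + count
    return value


def abacus_add(a_beads, b_beads):
    """Add rod by rod from the ones rod upward, carrying when a rod exceeds nine beads."""
    result = [0] * len(a_beads)
    carry = 0
    for i in reversed(range(len(a_beads))):
        total = a_beads[i] + b_beads[i] + carry
        result[i] = total % 10   # beads left on this rod
        carry = total // 10      # ten beads here become one bead on the next rod
    if carry:
        raise OverflowError("the result needs more rods")
    return result


print(to_abacus(1907))                                          # [1, 9, 0, 7]
print(from_abacus(abacus_add(to_abacus(1907), to_abacus(95))))  # 2002
```

Carrying ten beads on one rod into a single bead on the next is the same place-value rule used in pencil-and-paper addition.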

As human civilization expanded, further technological advancements were made to aid exploration and computation. For example, the Astrolabe was an early computational device used in sea navigation through basic astronomical computation. Many such early machines were created to perform increasingly complex calculations, such as tracking time and position.

Military applications were among the most common, since accurately aiming artillery depends on many variables. Hand-operated calculating machines were used to precompute tables of values covering several variables[1], and these tables were then used for a specific application. The drawbacks were the room for error in precomputed tables and their specificity: a new cannon required a new set of precomputed values.
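The precomputation idea can be sketched in Python: do the expensive calculation once to build a table, then answer questions in the field by lookup. This is a hypothetical and heavily simplified example. Ranges come from the drag-free projectile formula R = v² sin(2θ) / g, whereas real firing tables also had to account for air resistance and other factors. The muzzle velocities and function names are illustrative assumptions.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def range_without_drag(muzzle_velocity, elevation_deg):
    """Horizontal range (m) of a projectile on flat ground, ignoring air resistance."""
    theta = math.radians(elevation_deg)
    return muzzle_velocity ** 2 * math.sin(2 * theta) / G


def build_firing_table(muzzle_velocity, elevations):
    """The slow step, done once per gun: map each elevation to its range."""
    return {e: range_without_drag(muzzle_velocity, e) for e in elevations}


def elevation_for_range(table, target_range):
    """The fast step in the field: pick the tabulated elevation closest to the target."""
    return min(table, key=lambda e: abs(table[e] - target_range))


# Hypothetical gun with a 300 m/s muzzle velocity, elevations 5 to 45 degrees.
table_a = build_firing_table(300, range(5, 50, 5))
print(elevation_for_range(table_a, 9000))  # 40

# A different gun (here 250 m/s) needs its own table; the old one no longer applies.
table_b = build_firing_table(250, range(5, 50, 5))
print(elevation_for_range(table_b, 6000))  # 35
```

The sketch stops at 45 degrees so that each range has a single elevation, and it picks the nearest row instead of interpolating to keep the example short.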

This led to the conceptual inventions of Charles Babbage, often regarded as the father of computing[2]. He conceived two machines: the Difference Engine and the Analytical Engine.

The Difference Engine was designed to compute mathematical tables by evaluating polynomial functions[2] using the method of finite differences. It would have been a significant improvement over the hand devices that human computers used to produce the many lookup tables that were needed. The engine was based on polynomials because the functions scientists and navigators needed, such as logarithms and trigonometric functions, can be approximated by polynomials.
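The method of finite differences, which gave the Difference Engine its name, lets a machine tabulate a polynomial using only repeated addition: once the first value and its differences are known, every later row follows by adding neighboring columns. Below is a minimal Python sketch of that method; the polynomial x² + x + 41 and the function names are illustrative choices, not details of Babbage's design.

```python
def initial_differences(values):
    """From the first degree+1 values of a polynomial, derive f(0), Δf(0), Δ²f(0), ..."""
    diffs = []
    row = list(values)
    while row:
        diffs.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs


def tabulate_by_differences(diffs, count):
    """Produce `count` successive values using additions only."""
    columns = list(diffs)
    table = []
    for _ in range(count):
        table.append(columns[0])
        # Update top-down: each column absorbs the (not yet updated) column to its right.
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return table


def f(x):
    return x * x + x + 41


start = initial_differences([f(0), f(1), f(2)])  # a quadratic needs three values
print(start)                                      # [41, 2, 2]
print(tabulate_by_differences(start, 6))          # [41, 43, 47, 53, 61, 71]
```

For a polynomial of degree n, the n-th difference is constant, so after the initial setup no multiplication is ever needed, which is what made the approach attractive for a mechanical calculator.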

Babbage later conceived the Analytical Engine, a general-purpose computing device that would receive input from punch cards and produce output through a printer, a bell, and a curve plotter[2]. Neither engine was completed during Babbage's lifetime, but both laid the groundwork for later computers.

The Modern Concept

In the late 19th century, America faced a significant problem: its booming population made tabulating census data next to impossible. Processing took so long that the results would be outdated by the time they were ready. German-American statistician and inventor Herman Hollerith[3] solved this by inventing an electromechanical tabulating machine, the Punch Card Tabulator[4], which recorded data about the rapidly growing population on punch cards. Punch cards had been proposed as an input medium before, notably for Babbage's Analytical Engine[4].

Using these machines for the 1890 Census saved millions of dollars and cut tabulation time from a projected decade or more to a few years. To market his invention, Hollerith founded the Tabulating Machine Company, which in 1911 merged with other firms to form the Computing-Tabulating-Recording Company (CTR). In 1924, CTR was renamed International Business Machines Corporation, famously known as IBM.[5]

In the following decades, the theory of computation advanced greatly, most notably through the work of mathematician Alan Turing. Turing is known for the concept of Turing Completeness and for the Turing Machine, a mathematical model of an abstract machine that operates by manipulating symbols on a strip of tape (the BrainF*ck programming language closely resembles the original Turing Machine). Turing introduced the Turing Machine in 1936 while working on the Entscheidungsproblem, arguing that such a machine could carry out any computation that can be described by an algorithm[6].
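To show how little machinery the model needs, here is a minimal Python simulator for a single-tape Turing Machine, with an example program that increments a binary number. The rule format (a dictionary mapping `(state, symbol)` to `(symbol to write, head move, next state)`), the state names, and the `_` blank symbol are conventions chosen for this sketch, not Turing's original notation. A `max_steps` guard is included because, in general, there is no way to know in advance whether a machine will halt.

```python
from collections import defaultdict

BLANK = "_"


def run_turing_machine(rules, tape, state="start", max_steps=10_000):
    """Run until the machine enters the 'halt' state; return the non-blank tape contents."""
    cells = defaultdict(lambda: BLANK, enumerate(tape))  # tape unbounded in both directions
    head, steps = 0, 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move  # -1 = left, 0 = stay, +1 = right
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(BLANK)


# Binary increment: walk right to the end of the number, then add 1 moving left.
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),  # ran off the left edge: new leading 1
}

print(run_turing_machine(INCREMENT, "1011"))  # 1100
print(run_turing_machine(INCREMENT, "111"))   # 1000
```

The whole "program" is the rule table; the simulator itself only reads a symbol, writes a symbol, moves the head, and changes state.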

This work laid a foundation for programming languages and for the criterion of whether a language is Turing-Complete, meaning that it can compute everything a Turing Machine can.
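The BrainF*ck language mentioned above makes the link between a Turing Machine and a programming language concrete: its eight commands move a pointer along a tape of cells and change their values, much like a tape head. The Python interpreter below is a minimal sketch under a few assumptions: cells hold values 0 to 255 and wrap around, the tape grows to the right on demand (approximating the unbounded tape that Turing completeness requires), and reading past the end of the input yields 0. Conventions like these vary between implementations, and `run_bf` is a hypothetical name.

```python
def run_bf(program, data=b""):
    """Interpret a BrainF*ck program and return its output bytes."""
    # Pre-match brackets so loops can jump in one step.
    jumps, stack = {}, []
    for pos, ch in enumerate(program):
        if ch == "[":
            stack.append(pos)
        elif ch == "]":
            if not stack:
                raise ValueError(f"unmatched ']' at {pos}")
            start = stack.pop()
            jumps[start], jumps[pos] = pos, start
    if stack:
        raise ValueError(f"unmatched '[' at {stack[-1]}")

    tape, ptr, pc = [0], 0, 0
    inp, out = iter(data), bytearray()
    while pc < len(program):
        ch = program[pc]
        if ch == ">":
            ptr += 1
            if ptr == len(tape):
                tape.append(0)          # grow the tape on demand
        elif ch == "<":
            if ptr == 0:
                raise IndexError("moved left of the first cell")
            ptr -= 1
        elif ch == "+":
            tape[ptr] = (tape[ptr] + 1) % 256
        elif ch == "-":
            tape[ptr] = (tape[ptr] - 1) % 256
        elif ch == ".":
            out.append(tape[ptr])
        elif ch == ",":
            tape[ptr] = next(inp, 0)    # 0 at end of input (one common convention)
        elif ch == "[" and tape[ptr] == 0:
            pc = jumps[pc]              # skip the loop body
        elif ch == "]" and tape[ptr] != 0:
            pc = jumps[pc]              # jump back to the loop start
        pc += 1                         # any other character is ignored as a comment
    return bytes(out)


print(run_bf("++++++++[>++++++++<-]>+."))       # b'A' (8 * 8 + 1 = 65)
print(run_bf(",>,[-<+>]<.", bytes([2, 3]))[0])  # 5
```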

The first working programmable, fully automatic computer is generally credited to German engineer Konrad Zuse. His machine, the Z3 (completed in 1941), used the binary system instead of the decimal system that calculating devices had used since the time of the abacus. Binary suits electrical machinery because a wire or relay naturally has two states: on or off, true or false, 1 or 0. Combining many such switches yields logic gates, from which arithmetic, memory, and control logic can be built, making the machine truly programmable.
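As an illustration of how two-state signals combine into useful logic, here is a Python sketch of a ripple-carry adder built only from AND, OR, and XOR operations on single bits. It is a generic textbook construction, not a model of the Z3's actual relay circuits, and the 8-bit width and function names are assumptions for the example.

```python
def full_adder(a, b, carry_in):
    """Add three single bits using only XOR, AND, and OR gates."""
    partial = a ^ b                             # XOR gate
    sum_bit = partial ^ carry_in                # XOR gate
    carry_out = (a & b) | (partial & carry_in)  # two AND gates feeding an OR gate
    return sum_bit, carry_out


def ripple_add(x, y, width=8):
    """Add two unsigned integers bit by bit, passing each carry to the next adder."""
    total, carry = 0, 0
    for i in range(width):
        a = (x >> i) & 1  # i-th bit of x: a wire that is either on or off
        b = (y >> i) & 1
        sum_bit, carry = full_adder(a, b, carry)
        total |= sum_bit << i
    return total, carry   # the final carry signals overflow


print(ripple_add(11, 6))     # (17, 0)  i.e. 1011 + 0110 = 10001
print(ripple_add(200, 100))  # (44, 1)  300 does not fit in 8 bits
```

Chaining one full adder per bit keeps each gate trivially simple; the cost is that the carry must ripple through every stage before the result settles.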

A Look into the Future

Computers have come a long way from human-driven calculation, and even from the Z3. Semiconductor and microchip technology now allow computational speeds that could only be dreamed of in the past. Many expect the next major evolution of computers to come in the form of Quantum Computing.

The details get complex quickly, but in short, quantum computers use principles of quantum mechanics, such as superposition and entanglement, to solve certain problems far faster than traditional computers can. This field is emerging rapidly and is certainly one that developers should watch in the years to come.
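Superposition and entanglement can be illustrated, though not exploited, on an ordinary computer by tracking a quantum state as a list of complex amplitudes. The Python sketch below applies a Hadamard gate and then a CNOT gate to two qubits starting in |00⟩, producing the entangled Bell state (|00⟩ + |11⟩)/√2. It is a classical simulation for intuition only: the list doubles in length with every added qubit, which is one reason such simulations do not scale. The bit-ordering convention (qubit 0 as the lowest bit of the index) and the function names are assumptions of this sketch.

```python
import math


def apply_hadamard(state, qubit):
    """Hadamard gate: puts `qubit` into an equal superposition of 0 and 1."""
    s = 1 / math.sqrt(2)
    bit = 1 << qubit
    new = list(state)
    for i in range(len(state)):
        if (i & bit) == 0:  # visit each (bit=0, bit=1) pair once
            a, b = state[i], state[i | bit]
            new[i] = s * (a + b)
            new[i | bit] = s * (a - b)
    return new


def apply_cnot(state, control, target):
    """CNOT gate: flips `target` in every basis state where `control` is 1."""
    new = list(state)
    for i in range(len(state)):
        if i & (1 << control):
            new[i] = state[i ^ (1 << target)]
    return new


def probabilities(state):
    """Chance of measuring each basis state: squared magnitude of its amplitude."""
    return [abs(amp) ** 2 for amp in state]


# Two qubits; index i is the basis state |q1 q0> written in binary.
state = [1 + 0j, 0j, 0j, 0j]      # |00>
state = apply_hadamard(state, 0)  # (|00> + |01>)/sqrt(2): superposition
state = apply_cnot(state, 0, 1)   # (|00> + |11>)/sqrt(2): entanglement
print([round(p, 3) for p in probabilities(state)])  # [0.5, 0.0, 0.0, 0.5]
```

The outcomes 01 and 10 have zero probability, so measuring one qubit determines what the other will show; this correlation is a hallmark of entanglement.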

Overall, the major trends in computing history stem from two needs: keeping track of people and property, and quickly solving increasingly involved and challenging computational problems. This helps explain how the applications of computers have grown and evolved over time, and why computer science is so entwined with electrical engineering, mathematics, and now quantum mechanics.

Key Concepts

- Origins
  - Growing civilizations required that people (computers) use computational aids such as the abacus to keep track of people and property.
  - Human computers produced precomputed tables that were published in manuals for use in the military and other fields.
  - Charles Babbage conceived the Difference Engine, to tabulate polynomial functions more efficiently, and the general-purpose Analytical Engine.
- The Modern Concept
  - In the late 19th century, the US Census found it nearly impossible to tabulate data on the millions of people living in the United States.
  - Herman Hollerith created the Punch Card Tabulator, an electromechanical machine that used punch cards for input. His company later became part of the Computing-Tabulating-Recording Company, which became IBM.
  - Alan Turing created the concept of the Turing Machine, an abstract machine that manipulates symbols on a strip of tape. Despite its simplicity, it can in principle carry out any algorithm.
  - Konrad Zuse built the Z3, generally credited as the first working programmable computer. It used the binary system, which maps onto two-state electrical switches better than the decimal system does.
- A Look Into the Future
  - The future of computers looks bright in the field of Quantum Computing.
  - Quantum Computing uses principles of quantum mechanics, such as superposition and entanglement, allowing it to solve certain problems far faster than traditional computers.
  - Throughout its history, computer science has become entwined with fields such as mathematics and electrical engineering. Quantum mechanics could be the latest.

References

1. Precomputation, Wikipedia. https://en.wikipedia.org/wiki/Precomputation
2. Charles Babbage, Britannica. https://www.britannica.com/biography/Charles-Babbage
3. Herman Hollerith, Wikipedia. https://en.wikipedia.org/wiki/Herman_Hollerith
4. IBM. https://www.ibm.com/ibm/history/ibm100/us/en/icons/tabulator/
5. IBM, Computer History Museum. https://www.computerhistory.org/brochures/g-i/international-business-machines-corporation-ibm/
6. Alan Turing, Britannica. https://www.britannica.com/biography/Alan-Turing