W1082 Programming Language Survey

Overview

Computer Science is a diverse field with many distinctions and categorizations. At the heart of most of it, however, is the programming language. Programming languages are a way of writing instructions to a machine using a specific syntax determined by the language (syntax simply means the rules for how code in the language must be written, similar to the grammar and spelling rules of English).

Programming languages also have semantics (essentially the meaning a language assigns to syntactically valid code), and they can differ in the logic used to achieve a desired output. Languages therefore differ in several aspects: their syntax, the way their source code is executed, their handling of semantics, and so on. The following is an overview of these key aspects and how they relate to both historical and modern programming languages, followed by a deeper discussion of each.

Compiled and Interpreted

The first categorization of programming languages is the distinction between a Compiled Language and an Interpreted Language. This distinction has to do with the way a program is executed and, in a sense, translated. To better understand this concept, recognize that code is meant to be a series of commands to a machine. When these commands are completed, that is referred to as execution of the code.

Most programming languages are written using letters, numbers, and symbols that humans are familiar with; this is what's called source code. However, characters that a human recognizes make little to no sense to a computer, and even if they did, trying to understand hundreds of different characters and the words they form would be a very inefficient way to execute source code. This is why, before a program can be executed, it must be "translated" into machine code (also referred to as binary), so called because it has meaning only to a machine.

Machine code is simply a series of 0s and 1s (zeros and ones), a simple yes or a no. Perhaps the most popular way of explaining the concept of binary is through a simple light bulb. You could have a machine set so that a 0 tells a machine to keep the light bulb off, and a 1 tells the machine to turn the bulb on. Then, if you include another bulb, simply add another binary digit to handle all the outcomes of the additional bulb.
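The light bulb picture can be sketched in a few lines of Python. This is only an illustration: the function name `bulb_states` is made up for this example, and each character in a result stands for one bulb (0 for off, 1 for on).

```python
from itertools import product

def bulb_states(bulb_count):
    """Return every on/off combination for the given number of bulbs."""
    # Each bulb is one binary digit: "0" means off, "1" means on.
    return ["".join(bits) for bits in product("01", repeat=bulb_count)]

# Every extra bulb adds one digit and doubles the number of combinations.
for count in (1, 2, 3):
    states = bulb_states(count)
    print(count, "bulb(s):", len(states), "states", states)
```

With two bulbs the four combinations are 00, 01, 10, and 11; with three bulbs there are eight.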

Of course, as additional features are added to hardware, it becomes increasingly difficult to understand how a machine operates through only these 0s and 1s, which is precisely why most programming is done at a high level, meaning far away from the hardware. The bottom line is that the source code you write must be translated into executable machine code, and the primary approaches to "translating" source code are compiling and interpreting.

Compiled Languages

Compiled Languages take the approach of running the source code through a compiler, which produces an executable file (machine code). In other words, the compiler translates the source code directly into machine code. As a result, Compiled Languages tend to run faster and perform more efficiently than Interpreted Languages, which translate source code while the program is running (as is discussed later on this page). Compiling is akin to running a set of foreign instructions through a translator and getting the exact same instructions back in a language that you now understand.

However, this approach also means that a program in a Compiled Language must be re-compiled after you make changes to its source code, because those changes must be translated again by running them through the compiler. This can lead to slower development when working with a Compiled Language.

The machine code that a compiler produces is machine specific. This means that a machine code file compiled for a Windows machine will not be executable on a Linux or macOS machine. This issue is known as portability, and it is why many consider Compiled Languages unportable. Imagine the set of foreign instructions again. It's as if the operating systems speak different languages from each other, so translating the foreign instructions once is not enough to allow all the operating systems to execute them.

Note that when dealing with portability, the CPU often plays an important role in whether certain software can be run. For example, many Windows applications come in both 32-bit and 64-bit versions. This is because some machines still run Windows on a 32-bit processor, and a 32-bit processor simply cannot run software compiled to 64-bit binary. It's as if you were asked to turn on 64 light bulbs while given only 32. And while most systems today are 64-bit, it's an important distinction to make when working with compiled languages.

A common consequence of unportable languages is that applications can be run on only one OS. This affects software ranging from video games to CAD software—essentially any environment where performance is critical and, thus, compiled languages are preferred.

However, many people also consider several Compiled Languages portable. The C programming language, for example, is considered highly portable because a C compiler exists for nearly every platform. That is, the source code rather than the executable has to be shipped to the machine, but nearly every machine can compile it.

The C programming language is often considered the quintessential example of a compiled language. It is famed for influencing many modern-day languages, and it is used in applications where performance is critical. These include the Unix operating system, the macOS and Windows kernels, the MySQL database, and many more.

In summary, Compiled Languages are generally superior to Interpreted Languages in terms of performance. However, their primary drawbacks are that it can take longer to develop code because of the nature of re-compiling, and that resulting machine code can be platform dependent, which means portability can become an issue.

Interpreted Languages

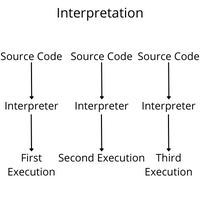

Interpreted Languages present an entirely different solution to "translating" the set of instructions (source code) into something the machine can act on. This is done by another program on the machine, called the interpreter. The interpreter goes through the source code line by line and tells the machine how to execute each line.

Imagine a set of instructions in a foreign language that needs to be executed. Interpreting is as if another person who knows the language reads the instructions aloud, telling the executor exactly what each one means. In contrast, compiling is as if a machine took the instructions in the foreign language and handed the executor a translated copy they could understand.
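To make the line-by-line idea concrete, here is a minimal sketch of an interpreter for a tiny made-up language. The three commands (`set`, `add`, `print`) and the function name `interpret` are inventions for this example; real interpreters are far more elaborate.

```python
def interpret(source):
    """Run a tiny made-up language one line at a time and return its output."""
    variables = {}
    output = []
    for line in source.strip().splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        command = parts[0]
        if command == "set":      # e.g. "set x 5" stores 5 in x
            variables[parts[1]] = int(parts[2])
        elif command == "add":    # e.g. "add x 3" adds 3 to x
            variables[parts[1]] += int(parts[2])
        elif command == "print":  # e.g. "print x" records the value of x
            output.append(str(variables[parts[1]]))
        else:
            raise ValueError(f"unknown command: {command}")
    return output

program = """
set x 5
add x 3
print x
"""
print(interpret(program))
```

Nothing is translated ahead of time: each line is read, understood, and carried out only when the loop reaches it, so an unknown command on the last line would not be noticed until every earlier line had already run.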

Interpreted Languages tend to be less efficient in performance than compiled languages because the middle-man interpreter must always go through the source code line by line. However, having an interpreter means that changes to code are implemented quicker because no re-compiling is needed. Instead, the interpreter simply tells the machine the new instructions. This can lead to quicker development times.

When it comes to the question of portability, interpreted languages are almost always considered highly portable because they always work, as long as the required interpreter is on the machine. It doesn't matter what machine is being used as long as the program can tell the machine exactly how to execute each line. Think back to the analogy of a foreign language; it doesn't matter if all the machines speak a different language if the interpreter can still explain the language to all the machines independently.

The quintessential example of interpreted languages is today's most popular language: Python. It is used in data science, machine learning, and even web applications. Its syntax is beginner-friendly and easy to read, and its interpreter is preinstalled on or readily available for most systems, so it has wide portability.

In summary, Interpreted Languages are quicker for development compared to Compiled Languages. They are also widely portable. Their main drawback is their inherently slower performance.

Bytecode and JIT

Two additional terms come up when studying Compiled vs Interpreted Languages: bytecode and JIT (Just-In-Time compilation). Much overlap exists between the two; at the base level, however, bytecode can be thought of as a combination of compiling and interpreting, and JIT can be thought of as a feature layered on top of many Interpreted Languages.

Bytecode Languages operate by compiling the source code into bytecode and then letting a virtual machine interpret this bytecode so the machine can execute it. This has a few advantages: the performance is often considerably faster than purely Interpreted Languages, and it is more portable and easier to develop than purely Compiled Languages.
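The compile-then-interpret idea can be observed directly in CPython, the reference implementation of Python, which compiles source code to bytecode internally before its virtual machine runs it. The sketch below uses only the built-in `compile` and `exec` functions and the standard `dis` module; the variable names and the `"<example>"` file label are arbitrary choices for this illustration.

```python
import dis

source = "result = 2 + 3"

# Step 1: compile the source text into a code object that holds bytecode.
code_object = compile(source, "<example>", "exec")
print(type(code_object.co_code))  # the raw bytecode is stored as bytes

# Step 2: list the bytecode instructions in human-readable form.
# The exact instructions differ between Python versions.
dis.dis(code_object)

# Step 3: let the Python virtual machine execute the bytecode.
namespace = {}
exec(code_object, namespace)
print(namespace["result"])
```

The listing printed by `dis.dis` is not machine code; it is a compact intermediate form that only the virtual machine understands, which is what makes the same bytecode usable on any machine where that virtual machine is installed.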

Easily the most well-known example of this methodology is the Java programming language, famous for its slogan: "write once, run anywhere." Java source code is compiled into bytecode, which the JVM (Java Virtual Machine) then interprets, so the same bytecode can be executed on any machine that has the JVM installed.

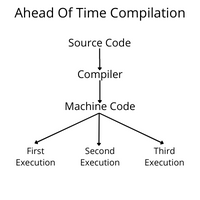

The JIT feature, often used to accelerate modern interpreted and bytecode languages, finds frequently executed or otherwise optimizable areas of the code and compiles them into machine code at runtime, just before they are needed, hence the name just-in-time. Its counterpart is AOT, or ahead-of-time compilation (essentially "normal" compilation). JIT compilers are found in many interpreted and bytecode language implementations, including those of today's three most popular programming languages: Java (the JVM), JavaScript (modern browser engines), and Python (for example, the PyPy implementation).

Strongly Typed and Weakly Typed

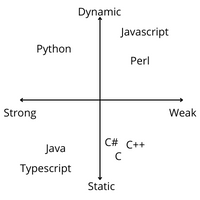

Languages can be further classified by how they handle typing. Typing is a way of classifying variables, classes, objects, etc., according to what values they are meant to hold. Typing is often thought of along two axes:

- Strongly typed vs weakly typed (also referred to as loosely)

- Static typing vs dynamic typing (dynamic typing is sometimes loosely called untyped). Static and dynamic typing are covered in Static and Dynamic Semantics.

As a rule, strongly typed languages tend to be stricter in their typing rules and refuse to run code that contains type errors. A common theme in strongly typed languages is explicit typing: types are not converted automatically based on use case. For example, the following Java code will not compile, because the expression 3 + number1 produces a String, which cannot be assigned to an int variable.

    class Main {
        public static void main(String args[]) {
            String number1 = "123";
            int number2 = 3 + number1; // compile error: a String cannot be assigned to an int
        }
    }

Keep in mind that the goal of strong typing is to prevent errors in production caused by potentially erroneous uses of types.

Weakly typed languages, on the other hand, are considered more flexible in their typing rules. A common feature in weakly typed languages is implicit type conversion. Note that there aren't many hard-and-fast rules as to what makes a language strongly typed or weakly typed. Indeed, these two terms don't have a definition on which developers have agreed. Thus, it's best to think of them as two approaches to typing, one whose goal is to be strict and the other whose goal is to be flexible.

Type Inference, Implicit and Explicit Typing

Another element of typing is whether languages include type inference or require explicit typing. As the name suggests, type inference is when the language assigns a type to a variable, field, etc., according to how it is being used. This is seen in many modern languages such as Swift and TypeScript. You can think of type inference as a middle ground between implicit typing, where types can be converted based on use case, and explicit typing.

For example, in the following Swift code, notice that no type is declared explicitly, yet the language knows the typing anyway.

    let i = "Hello"
    print(type(of: i)) // Prints String

Keep in mind that type inference can exist in both strongly and weakly typed languages. The question of strong versus weak has to do with how strictly the rules for a type are enforced, not with how the type is assigned. However, type inference comes with a few drawbacks: code can be harder to read when types are not written out, and working out the types can add to compilation time.

Trends

Typing is a complex field of programming; however, many developers appear to prefer a more explicit, strongly typed structure for the reliability it brings, as opposed to the convenience gained from weakly typed languages. Perhaps the best case study is the emergence of TypeScript alongside JavaScript.

JavaScript is famous for being the primary programming language behind the web. TypeScript is a superset of JavaScript in that it has everything JavaScript has, plus a few additional features. The primary addition is a stricter type system that is checked before the code runs. This means that many bugs are found in development, as opposed to in production, which is what can happen with JavaScript's weaker type system.

Despite this, type systems are ultimately dependent on the desired application of the language and should be considered as such when looking at a language's type system.

Static and Dynamic Semantics

Languages are either Static or Dynamic in their semantics. Typically, this is thought to be in relation to typing, but semantics have broader implications that affect performance and even enterprise upkeep, in some cases.

As mentioned in the discussion on strong and weak typing, languages can be considered on two axes: one axis is strongly typed vs weakly typed, and the other is static typing vs dynamic typing. The discussion here is about the implications of this second axis. First, it is important to understand the meaning of semantics. Semantics refers to the branch of logic that has to do with the meaning of words, so by discussing static and dynamic semantics, one is discussing two modalities of how a machine understands the logic written in source code. Keep this in mind while reading.

Static

Static Languages are thought to be the stricter end of this axis. Essentially, in a static language, objects, variables, classes, etc., are just as they are at compile time; their types do not change at runtime. The most substantial consequence of this is that if a program in a language with static semantics compiles, the errors covered by those checks have already been ruled out before it runs (though the code is not guaranteed to work how it was intended).

Of course, the effects of static semantics are most often seen with typing, because in a static language types have further strict rules. With static languages, types are checked at compile time, meaning a value of one type cannot be used erroneously where another type is required. Because of this, many think of the term not as static typing but as Static Checking. When considering static semantics, however, don't confuse it with the static keyword found in many languages (often this use of "static" denotes a method or variable that occupies only one area within memory).

The effects of static semantics can also be seen on syntax. Observe how the types for corresponding variables in this Java code must be declared.

    class Main {
        public static void main(String args[]) {
            String s = "Hello World ";
            int i = 3000;
            System.out.println(s + i);
        }
    }

These type declarations are used with the language's static semantics to check the code for errors before runtime. In dynamic typing (which is discussed just below), these types are often not required. Instead, they are simply variables given values. In this way, the conversation on semantics blends with the discussion of explicit and implicit typing.

The Bottom Line: Static semantics checks code at compile time. This way, types are standardized, and type errors surface before the program ever runs.

Dynamic

Note that dynamic languages are sometimes loosely referred to as untyped languages, although their values still carry types at runtime.

Dynamic languages are inherently the opposite of static. Thus, while static languages do checking at compile time, dynamic languages do checking at runtime. This allows you far greater flexibility. You can write code more quickly and compile it more easily because fewer checks are in place.

This means many dynamic languages do not require types to be put onto variables. JavaScript and Python are two examples. Rather, declarations can be made and the value can be assigned to a variable. If the language has type inference as a feature, it can assign a type when it is declared. Observe the following Python code.

    variable = "23"    # assigns a value of type string
    i = int(variable)  # casts string to int
    i += 1             # increments the int
    print(i)           # prints 24

In the above Python script, typing still plays a role and requires you to cast the string type to an integer before doing integer operations on it. However, its dynamic semantics coupled with type inference means you aren't required to declare types in the source code.

In fact, Python is an interesting case because it is considered both a strongly typed language and dynamic in its semantics. To understand why, let's compare the same lines of code in three languages: Python (a strongly typed, dynamic language), JavaScript (a weakly typed, dynamic language), and Java (a strongly typed, static language).

JavaScript Example

    console.log("hello runtime!"); // successfully prints
    let i = "123";
    i++;
    console.log(i); // successfully prints 124

Python Example

    print("hello run-time!")  # prints
    i = "123"
    i += 1  # throws a TypeError
    print(i)

Java Example

    class Main {
        public static void main(String args[]) {
            System.out.println("Hello run-time!"); // does not print, program fails at compile time
            int i = "123"; // compile error: a String cannot be assigned to an int
            i++;
            System.out.println(i);
        }
    }

As you can see, JavaScript runs the code and it works as intended because of the flexibility of its weak typing. Python, despite its dynamic semantics, throws a type error because its strong typing prevents the implicit type conversion. The important thing to understand, however, is that Python and JavaScript both attempt to run the code, and an error is thrown only when an issue is encountered at runtime. This is the essence of dynamic semantics. Meanwhile, the static typing of Java prevents its code from being compiled at all, so not even the first line runs.
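The runtime nature of Python's check can be made visible by catching the error. The sketch below wraps the same three steps in a function (`run_script` and `log` are names chosen for this illustration) and records what actually happened.

```python
log = []

def run_script():
    log.append("hello run-time!")  # this line executes normally
    i = "123"
    i += 1          # str + int is rejected, but only when this line is reached
    log.append(i)   # never reached

try:
    run_script()
except TypeError as error:
    log.append(f"TypeError: {error}")

print(log)
```

The log ends up with two entries: the greeting, which ran before the problem was detected, and the type error raised at the offending line. In the Java version nothing would have run at all.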

Broader Implications

Despite the complexities of semantics, the fundamentals tend to be the same across languages, and understanding these is integral to using the correct technologies for a project. Looking back on the advantages and disadvantages of the differing semantics, it is clear that dynamic languages provide quicker development cycles and flexibility, whereas static languages provide further safety, readability, and standardization of code.

This means that among larger organizations, static languages can often provide a more consistent code base, and dynamic languages might have the edge among smaller teams. For example, with a statically typed language, a new developer can easily look at the code and know whether it will run. With a dynamic language, a new developer will have to run the code and might find that it works only for certain user input and not for others. In this case, the flexibility of a dynamic language comes as a disadvantage.
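The "works only for certain input" situation can be sketched in Python as follows. The function `describe_order` and its threshold of 10 are hypothetical, invented purely to show a bug that hides on a branch most inputs never reach.

```python
def describe_order(quantity):
    """Hypothetical helper; quantity is expected to be an integer."""
    if quantity > 10:
        # Bug: concatenating str and int. Nothing checks this line
        # until an input actually reaches this branch.
        return "bulk order of " + quantity
    return "small order"

print(describe_order(3))  # the buggy branch is never executed

try:
    print(describe_order(50))
except TypeError as error:
    print("failed only for this input:", type(error).__name__)
```

A statically checked language would reject the faulty concatenation at compile time, regardless of which inputs are ever used.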

However, in a smaller team, development cycles might be considerably faster using dynamic semantics, as aspects such as casting, generics, etc., can be omitted in some cases. Here, dynamic semantics enhances efficiency by letting a small number of developers accomplish more.

Therefore, to make smart decisions on technologies required for projects, the pros and cons of the individual language should be considered.

Generics

Another feature often discussed in relation to languages is support for generics. Generic programming is a style in which certain methods or classes are defined so that they can be used with any data type. The use case is that the type that will be used in a method is not known in advance, so it is kept generic. Java is known for its support of generics.

The primary benefit is that the same code can be reused for multiple types, allowing for adherence to the DRY principle (the DRY principle simply states "Don't Repeat Yourself," meaning that code should not be repeated several times). Meanwhile, the primary drawback to this style of programming is the additional level of complexity and abstraction that it adds. Despite this, most static languages support this style of programming.

For an example of how to use a generic method, observe the following Java code.

    class Main {
        public static void main(String args[]) {
            int i = 5;
            String s = "hello world";
            print(i); // successfully prints
            print(s); // successfully prints
        }

        public static <T> void print(T t) {
            System.out.println(t);
        }
    }

In dynamic languages, however, generics are very rarely supported because a dynamic language will not attempt type checking at compile time. This means that generics would be simply redundant. Examine the following Python code, a dynamic language that does not have the need for generics.

    def printvar(i):
        print(i)

    printvar(1)        # pass integer parameter
    printvar("hello")  # pass string parameter
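As a side note, Python's optional type hints do include a generic notation, `typing.TypeVar`, intended for external static checkers rather than for the interpreter, which does not enforce hints at runtime. The sketch below is only an illustration of that notation; the function name `first` is made up for this example.

```python
from typing import List, TypeVar

T = TypeVar("T")  # stands for "whatever element type the list holds"

def first(items: List[T]) -> T:
    """Return the first element; the hint ties the result type to the element type."""
    return items[0]

print(first([1, 2, 3]))
print(first(["a", "b"]))
```

The function behaves exactly as it would without the hints, which is consistent with the point above: the dynamic language does not need generics to run the code, and the annotation only helps tools and readers reason about it.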

Language Summary Table

| Language | Compiled, Interpreted, Bytecode | JIT or AOT | Strong or Weak Typing | Implicit or Explicit Typing | Static or Dynamic Semantics | In-use or Historical | Support for Generics |
|---|---|---|---|---|---|---|---|
| Machine language | N/A | N/A | N/A | N/A | N/A | Historical | N/A |
| Assembly | N/A | N/A | N/A | N/A | Dynamic | Historical | N/A |
| Fortran | Compiled | AOT | Weakly Typed | Explicit | Static | In-use | unsupported |
| COBOL | Compiled | AOT | Strongly Typed | Explicit | Static | In-use | unsupported |
| ALGOL | Compiled | AOT | Strongly Typed | Explicit | Static | Historical | unsupported |
| Lisp | Compiled | AOT | Strongly Typed | Implicit | Dynamic | In-use | supported |
| C | Compiled | AOT | Weakly Typed | Explicit | Static | In-use | supported |
| C++ | Compiled | AOT | Weakly Typed | Implicit | Static | In-use | supported |
| C# | Bytecode | JIT | Strongly Typed | Partially Implicit | Static | In-use | supported |
| Prolog | Compiled | AOT | Weakly Typed | Implicit | Dynamic | In-use | unsupported |
| Ada | Compiled | AOT | Strongly Typed | Explicit | Static | In-use | supported |
| Pascal | Compiled | AOT | Strongly Typed | Explicit | Static | In-use | supported |
| Modula-2 | Compiled | AOT | Weakly Typed | Explicit | Static | In-use | unsupported |
| Perl | Bytecode | JIT | Weakly Typed | Implicit | Dynamic | In-use | unsupported |
| Smalltalk | Bytecode | JIT | Strongly Typed | Implicit | Dynamic | In-use | supported |
| Java | Bytecode | JIT | Strongly Typed | Explicit | Static | In-use | supported |
| Swift | Compiled | AOT | Strongly Typed | Partially Implicit | Static | In-use | supported |
| JavaScript | Interpreted | JIT | Weakly Typed | Implicit | Dynamic | In-use | unsupported |
| TypeScript | Interpreted | JIT | Optional | Optional | Optional | In-use | supported |
| Python | Interpreted | JIT | Strongly Typed | Implicit | Dynamic | In-use | unsupported |
| Rust | Compiled | AOT | Strongly Typed | Explicit, optional Implicit | Static | In-use | supported |
| Go | Compiled | AOT | Strongly Typed | Partially Implicit | Static | In-use | supported |
| Kotlin | Bytecode | JIT | Strongly Typed | Partially Implicit | Static | In-use | supported |
| PHP | Interpreted | JIT | Weakly Typed | Explicit | Dynamic | In-use | unsupported |