Curriculum

| Coder Merlin™ Computer Science Curriculum Data | |
|---|---|
| Unit | Computer history |
| Experience Name | Computer History (W1021) |
| Next Experience | |
| Knowledge and skills | |
| Topic areas | Contributors to computer science; Computer hardware |
| Classroom time (average) | 30 minutes |
| Study time (average) | 60 minutes |
| Successful completion requires knowledge | understand the evolution of computer hardware systems through time; identify key contributors to the development of computer science |
| Successful completion requires skills | demonstrate proficiency in explaining the progression of computer hardware systems through time; demonstrate proficiency in identifying the individuals and their contributions toward computer science |

A Brief History of Computers

This experience gives an overview of how machines came to emulate the power of the human mind and gradually evolved into the computers we use today. Studying the timeline of computer science will help you better understand the progress and growth of technology in our everyday lives.

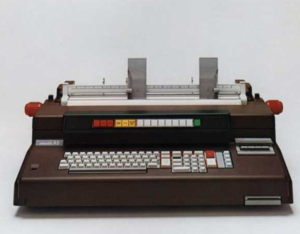

The Abacus

Before the 20th century, most calculations were performed manually by humans. Some early mechanical tools, such as the abacus, provided assistance with basic arithmetic. On an abacus, each rod of beads represents one decimal place (the ones, tens, hundreds, and thousands places), so a number is entered by sliding beads to count out each of its digits. Tools like this were called calculating machines, and in the hands of a trained user they were faster at crunching numbers than working them out on paper. The people who operated these devices to obtain the results of calculations were themselves called computers.

The Difference Engine

Charles Babbage, an English mathematician and mechanical engineer, pioneered automatic computing machines, beginning with his first difference engine around 1819. The difference engine was an automated, mechanical calculator designed to tabulate polynomial functions. It was operated by turning a crank, and its main purpose was to compute astronomical and mathematical tables. Most mathematical functions that engineers, scientists, and navigators commonly use can be approximated by polynomials, so the difference engine could compute tables of many useful values. Producing these tables automatically was much faster, and far less error-prone, than the earlier approach of having a team of humans calculate them by hand. The drawing on the right shows a cross-section of the difference engine, where gear sectors are stacked between the columns to represent orders of numbers.
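The difference engine relied on the method of finite differences: for a polynomial of degree n, the n-th differences between successive values are constant, so once the first value and its differences are set on the machine's columns, every later value follows by addition alone. The short Python sketch below illustrates that arithmetic for the example polynomial p(x) = x² + x + 41. The function names are our own, and the code models the calculation, not the engine's gears.

```python
def initial_differences(values):
    """Return [p(0), Δp(0), Δ²p(0), ...] from the first n + 1 values of a
    degree-n polynomial. These are the starting settings of the columns."""
    columns = []
    row = list(values)
    while row:
        columns.append(row[0])
        # Next row: differences between neighboring entries.
        row = [b - a for a, b in zip(row, row[1:])]
    return columns


def tabulate(columns, count):
    """Produce `count` successive polynomial values using only addition,
    one value per 'turn of the crank'."""
    cols = list(columns)
    table = []
    for _ in range(count):
        table.append(cols[0])
        # Each column adds in the column to its right (the next-higher
        # difference). Going left to right uses each column's old value.
        for i in range(len(cols) - 1):
            cols[i] += cols[i + 1]
    return table


# p(x) = x**2 + x + 41: seed the columns with p(0), p(1), p(2).
columns = initial_differences([41, 43, 47])
print(columns)               # [41, 2, 2]
print(tabulate(columns, 6))  # [41, 43, 47, 53, 61, 71]
```

Because each new table entry costs only a few additions, the procedure lends itself to a purely mechanical implementation.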

The Analytical Engine

Charles Babbage's difference engine was never completed in his lifetime, because of limited government funding and difficulties in constructing its parts. Meanwhile, in 1833, he realized that a much more general design, the Analytical Engine, could perform all kinds of mathematical calculations. It had the four core components of a computer: the mill, the store, the reader, and the printer. These correspond to today's central processing unit (CPU), memory, input devices, and output devices. It was planned to be steam-powered and operated by a single attendant, automating tasks such as the construction of data tables.

The Analytical Engine would receive input from punch cards, a method already used at the time to direct mechanical looms. For output, the engine would have a printer, a curve plotter, and a bell, and it could also punch numbers onto cards. It also included an arithmetic logic unit, control flow, and integrated memory. It was capable of conditional branching, choosing which instructions on the cards to follow next based on the results of earlier calculations. The store could hold 1,000 numbers of 50 digits each, so the engine could run programs far more complex than printing out a simple sequence.

Ada Lovelace: The First Programmer

Although computer science and hardware engineering have historically been dominated by white men, the first documented computer programmer was a woman: Augusta Ada Lovelace.[1] Ada Lovelace, the daughter of the renowned poet Lord Byron and Annabella Byron, was a woman of nobility trained in the arts, math, music, and French. In 1833, at the age of 17, Ada met Charles Babbage at a party, and they soon became lifelong friends who shared a passion for his calculating engines.

When the Italian mathematician Luigi Federico Menabrea wrote a paper in French about Babbage's Analytical Engine for a Swiss journal, Ada translated it into English. In her translation, she also added extensive notes of her own. Published in 1843, her English translation and accompanying notes would become one of the most important contributions to computer science.[1] In many respects, Ada Lovelace invented the science of computing. In what is known as Note G, Ada wrote out the first computer program, which would have the Analytical Engine compute a series of Bernoulli numbers.[1] However, she did not believe the machine could originate anything on its own; it could only do what it was instructed to do. In this way, she dismissed any thought of what we would now call artificial intelligence.
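For readers curious about what Note G was computing, the Python sketch below generates exact Bernoulli numbers using a standard modern recurrence. It is an illustration under that assumption, not a transcription of Lovelace's program: Note G used a different formulation and numbering, and the function name here is hypothetical.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return exact Bernoulli numbers B_0 .. B_n (convention: B_1 = -1/2)."""
    B = [Fraction(1)]
    for m in range(1, n + 1):
        # Recurrence: the sum over k = 0..m of comb(m + 1, k) * B_k is 0,
        # so solve that equation for the newest term, B_m.
        s = sum(comb(m + 1, k) * B[k] for k in range(m))
        B.append(-s / (m + 1))
    return B


for i, b in enumerate(bernoulli_numbers(8)):
    print(f"B_{i} = {b}")
```

Using `Fraction` keeps every value exact, which matters because the recurrence reuses all earlier results and rounding errors would otherwise accumulate.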

Punch Cards

Punch card technology has existed in the textile industry since the early 1700s, when holes punched in paper tape were used to automate weaving looms. This revolutionized the textile industry and allowed complex patterns to be produced and replicated efficiently. About a hundred years later, punched cards were widely adopted, and the technique went on to become an important part of the history of data storage. By the end of the 19th century, Herman Hollerith, an engineer, had revolutionized how the U.S. and other nations took their government censuses, using machines, later sold by his Tabulating Machine Company, that read and tallied data from paper punch cards.

In 1924, the Computing-Tabulating-Recording Company, formed in 1911 by merging Hollerith's Tabulating Machine Company with other firms, was renamed the International Business Machines Corporation, known today as IBM. IBM became a leading manufacturer of electronic computers and other hardware, such as the disk drive. Punch cards remained the dominant medium for entering and storing data and programs until the 1970s, when they were replaced by terminals connected to minicomputers. [2][3]

Differential Analyzers

Differential analyzers were analog computers that could solve differential equations. They enabled major breakthroughs in science and technology, from designing antennas for radio transmission to calculating ballistic trajectories for the military. At their core, they solved practical problems by integration: continuously accumulating the area under a curve to obtain results from mathematical models. Operators set up a problem by connecting the machine's shafts and gears, using gear ratios to multiply quantities by constant factors such as 2. Because of mechanical constraints, this process was both time-consuming and prone to errors. The French physicist Gaspard-Gustave Coriolis was the first to conceive of a device that could solve first-order differential equations.

Early attempts struggled to model complex systems until the 1870s, when the engineer and physicist James Thomson devised a mechanical integrator, and integrating machines of this kind were applied to predicting the height of tides, one of the earliest proofs of concept. The first practical general-purpose differential analyzer was built around 1931 by MIT professor Vannevar Bush, who used it to solve differential equations arising from electrical power networks. A key addition was the torque amplifier, which let the weak rotation of an integrator's output shaft drive the rest of the machine.[1]
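The differential analyzer integrated continuously, using wheels and discs; a digital computer approximates the same idea by taking many small steps. The Python sketch below uses Euler's method, a simple numerical technique swapped in here for illustration (it is not how the analog machine worked), to solve a first-order differential equation. The function name `integrate_ode` is hypothetical.

```python
import math


def integrate_ode(f, x0, y0, x_end, steps):
    """Approximate y(x_end) for dy/dx = f(x, y), y(x0) = y0, with Euler's
    method: repeatedly add (slope * step width) to y."""
    h = (x_end - x0) / steps
    x, y = x0, y0
    for _ in range(steps):
        y += f(x, y) * h  # accumulate one thin slice of area under the slope
        x += h
    return y


# dy/dx = y with y(0) = 1 has the exact solution y = e**x, so y(1) = e.
approx = integrate_ode(lambda x, y: y, 0.0, 1.0, 1.0, 10_000)
print(approx, math.e)  # close to e; the error shrinks as `steps` grows
```

Increasing `steps` reduces the error in proportion to the step width, at the cost of more arithmetic.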

Human Computers

The idea of human computers dates back to efforts to predict the return of Halley's comet. At the time, "computers" were people, not machines. Over time, the task of evaluating difficult formulas was often given to groups of young women trained in advanced mathematics. They sat at tables and solved equations by hand, processing large amounts of data for work in astronomy, navigation, and surveying. These women, known as "human computers," worked long hours for the army, engineering firms, and later the space race.

Human computers played an important role in World War II: demand for them rose sharply, and all-female teams were put in charge of calculating firing tables for artillery. Aeronautics organizations such as NACA and its successor, NASA, also needed large amounts of computation. There, women processed air pressure readings from wind tunnel tests and calculated the flight paths of rockets. All of this came before the invention of digital computers that could run programs much faster and solve complex problems that might stump a person. [1]

The Turing Machine

More than one hundred years later, in 1936, Alan Turing proposed an abstract device, later known as a Turing machine, that could perform any conceivable mathematical computation, provided the computation could be represented as an algorithm. Taking a theoretical approach, he sought to capture what a human mind does when calculating, and described a machine, somewhat like a teleprinter, that carries out a series of simple procedures. The machine reads and writes symbols one at a time on a long strip of tape, and a finite table of rules tells it what to write, which way to move, and which state to enter next. Turing also showed that a single universal machine could read the rule table of any other Turing machine from its tape and imitate that machine's behavior.
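To make the tape-and-rule-table idea concrete, here is a minimal Python simulator for a one-tape Turing machine, together with a hypothetical rule table that adds 1 to a binary number. The state names (`seek`, `carry`, `halt`) and the blank symbol `_` are choices made for this sketch, not part of Turing's paper.

```python
def run_turing_machine(rules, tape, state="seek", blank="_", max_steps=10_000):
    """Simulate a one-tape Turing machine.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The machine stops in the state "halt"."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    # Read back the visited part of the tape, dropping blanks at both ends.
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


# Hypothetical rule table: add 1 to a binary number.
INCREMENT = {
    ("seek", "0"): ("0", "R", "seek"),    # move right to the end of the number
    ("seek", "1"): ("1", "R", "seek"),
    ("seek", "_"): ("_", "L", "carry"),   # step back onto the last digit
    ("carry", "1"): ("0", "L", "carry"),  # 1 + carry = 0, keep carrying
    ("carry", "0"): ("1", "L", "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", "L", "halt"),   # ran off the left end: new digit
}

print(run_turing_machine(INCREMENT, "1011"))  # 1100
```

The simulator itself never changes; only the rule table does. That separation is the seed of Turing's universal machine, which treats a rule table as just more data on the tape.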

Already recognized for his research in probability, Turing applied this idea to the decision problem (Entscheidungsproblem): whether there is a mechanical procedure that can decide, for any statement in a formal system, whether it is provable. In his 1936 paper, he reached the same conclusion that Alonzo Church had reached independently: no such general procedure exists. This result set fundamental limits on what can be computed. A key step in Turing's argument was that each machine's rule table can be encoded as a finite string of symbols, so all Turing machines can be listed in order (they are countable). As a consequence, most infinite decimals cannot be printed by any Turing machine.

The Z3

Key ideas developed in the 1930s demonstrated that there is a one-to-one correspondence between Boolean logic and certain electrical circuits (now called logic gates), which have become ubiquitous in digital computers. In other words, circuits built from switching elements (first electromechanical relays, and later vacuum tubes, diodes, and transistors) can implement the expressions of Boolean algebra. Common logic gates include AND, OR, XOR, and NOT.
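The Python sketch below shows how the basic gates listed above can be combined into a full adder, and then chained into a ripple-carry adder that performs binary addition. It illustrates the correspondence between Boolean logic and circuits only; it does not model the circuitry of any particular historical machine, and the function names are hypothetical.

```python
def AND(a, b):
    return a & b


def OR(a, b):
    return a | b


def XOR(a, b):
    return a ^ b


def NOT(a):
    return 1 - a  # inputs are assumed to be the bits 0 or 1


def full_adder(a, b, carry_in):
    """Add three bits using only gates; return (sum_bit, carry_out)."""
    partial = XOR(a, b)
    sum_bit = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out


def add_binary(x, y, width=8):
    """Ripple-carry adder: chain full adders from the least significant bit."""
    carry = 0
    result = 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # a final carry out of the top bit is discarded


# Truth table for the basic gates.
print("a b | AND OR XOR NOT(a)")
for a in (0, 1):
    for b in (0, 1):
        print(f"{a} {b} |  {AND(a, b)}   {OR(a, b)}   {XOR(a, b)}    {NOT(a)}")

print(add_binary(0b0101, 0b0011))  # 8
```

Because the adder is built only from AND, OR, and XOR, any technology that can realize those gates (relays, tubes, or transistors) can perform binary arithmetic.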

In May 1941, Konrad Zuse, a German civil engineer, completed the Z3, recognized as the world's first working programmable computer. Built from about 2,600 relays, it used the simpler binary system rather than the decimal system of Charles Babbage's designs. Notably, Zuse anticipated that machine instructions could be stored in the same memory used for data, a key insight given that the Z3 itself read its code from punched film. Although the Z3 lacked conditional branching, it was shown decades later, in 1998, that it was in principle Turing-complete. It was used to compute aircraft wing flutter problems and shared many similarities with modern machines.

ENIAC

Construction of the Electronic Numerical Integrator and Computer, or ENIAC for short, began in 1943. Instead of the electromechanical relays used in earlier machines, it performed its calculations with vacuum tubes. After multiple adjustments, J. Presper Eckert, John Mauchly, and their team completed it in 1945. The machine performed 5,000 additions per second and could store 20 numbers at once, but it consumed a whopping 150 kilowatts of power.

The ENIAC Six

In 1946, Kathleen McNulty Mauchly Antonelli, Jean Jennings Bartik, Frances (Betty) Snyder Holberton, Marlyn Wescoff Meltzer, Frances Bilas Spence, and Ruth Lichterman Teitelbaum became the ENIAC Six—the six women who programmed and operated the ENIAC, running critical ballistic calculations for the military in wartime. Their important roles in ENIAC's operations were lost for decades.[1] In the mid-1980s, Kathy Kleiman, a young programmer, uncovered the story of the ENIAC Six, and in 2013, worked with documentary producers to create The Computers, a 20-minute documentary telling the stories of the ENIAC Six.[2]

Colossus

The Colossus was built by Tommy Flowers, Harry Fensom, and Don Horwood and was working by 1944; it is widely regarded as the first programmable electronic digital computer. It was designed to help codebreakers at the Government Code and Cypher School (GC&CS) break the Lorenz cipher. Using several Colossi, the Allies could decipher Lorenz-encrypted German military messages, codenamed "Tunny," often within a matter of hours during World War II. Although it was kept secret from the public for decades, the Colossus showed that a large electronic machine could be reconfigured for different tasks, a step toward the concept of a general-purpose computer. The Lorenz machine it attacked used 12 wheels to generate a stream of key symbols, which were combined with the plain-text characters using the XOR Boolean function to produce the telegraphed ciphertext. Colossus read the intercepted ciphertext from a paper tape transport and, configured through its control panel, helped find the wheel settings. A working Colossus was later rebuilt to its original specifications, a project completed in 2008 by Tony Sale's team.
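The XOR combination described above has a useful property: applying the same key twice restores the original, because (p XOR k) XOR k = p. The Python sketch below demonstrates this with 5-bit symbols. The 32-character alphabet and the seeded pseudo-random key stream are stand-ins assumed for illustration; they are not the real teleprinter code or the Lorenz wheel patterns, and Python's `random` module is not cryptographically secure.

```python
import random

# Hypothetical 32-symbol alphabet so every symbol fits in 5 bits, like a
# 5-bit teleprinter code (this is NOT the real teleprinter code table).
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ .,'?-"


def key_stream(seed, length):
    """Stand-in for the Lorenz wheels: a reproducible stream of 5-bit keys."""
    rng = random.Random(seed)
    return [rng.randrange(32) for _ in range(length)]


def encrypt(text, seed):
    codes = [ALPHABET.index(ch) for ch in text]
    key = key_stream(seed, len(codes))
    return [c ^ k for c, k in zip(codes, key)]  # plain XOR key = cipher


def decrypt(cipher, seed):
    key = key_stream(seed, len(cipher))
    # XOR with the same key undoes the encryption: (p ^ k) ^ k == p.
    return "".join(ALPHABET[c ^ k] for c, k in zip(cipher, key))


secret = encrypt("ATTACK AT DAWN", seed=1944)
print(secret)
print(decrypt(secret, seed=1944))  # ATTACK AT DAWN
```

Breaking the real cipher depended on statistical regularities in the key stream produced by the wheels; this sketch does not attempt to model that.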

Manchester "Baby"

The Manchester Small-Scale Experimental Machine, nicknamed the "Baby," was built by Tom Kilburn and Freddie Williams and first ran a program in June 1948, making it the first electronic computer to execute a program held in its own memory. Williams had experimented with storing bits as electric charges on the phosphor face of a cathode-ray tube (CRT). Because the charges faded, they had to be read and rewritten continually, a process called regeneration. The same principle of periodic refresh is still used in the dynamic RAM (DRAM) of modern computers. Kilburn then joined the work and wrote a report describing the "dot-dash" method of storing bits on a CRT and its use in a theoretical computer. The next step was building the Baby itself, which held its program instructions in the same CRT memory as its data. Its first program found the highest proper factor of a number, reaching the right answer in 52 minutes. The machine used 32-bit words, performed serial binary arithmetic, and took about 1.2 milliseconds per instruction. [1]
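The Baby's instruction set could subtract but not divide, so its first program tested divisibility by repeated subtraction; the famous run searched for the highest proper factor of 2^18 (262,144). The Python sketch below mirrors that idea. It is an illustration, not a transcription of Kilburn's program, and the function name is hypothetical.

```python
def highest_proper_factor(n):
    """Find the largest factor of n smaller than n, trying each candidate
    from n - 1 downward and checking divisibility by repeated subtraction
    (no division or remainder operator is used)."""
    if n < 2:
        raise ValueError("n must be at least 2")
    candidate = n - 1
    while True:
        remainder = n
        while remainder > 0:  # subtract until we reach or pass zero
            remainder -= candidate
        if remainder == 0:    # landed exactly on zero: candidate divides n
            return candidate
        candidate -= 1        # always terminates, since 1 divides every n


print(highest_proper_factor(2**18))  # 131072
```

A modern laptop runs this search in a fraction of a second, while the Baby, at about 1.2 milliseconds per instruction, needed 52 minutes.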

Grace Hopper & The Compiler

Grace Hopper was a computer scientist and naval officer, honored for her contributions to the development of several programming languages at a time when women were beginning to earn doctorates and join the workforce in much greater numbers. Working alongside Howard Aiken at Harvard University, she helped program the IBM-built Mark I, one of the earliest electromechanical computers, by punching detailed instructions onto tape, and she wrote a user manual for its controls.

One of her greatest legacies is the high-level language COBOL, which drew heavily on her earlier work and has been used in business and finance systems across the country. Before that, she led a team that developed the A-0, an early compiler that translated mathematical code into binary machine code, a step toward writing programs that were not tied to a single computer. She also envisioned a data processor that could read English-like commands, and she created Flow-Matic to overcome the limitations of math-oriented languages such as FORTRAN.
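To illustrate what it means to translate a mathematical formula into machine-level instructions, the Python sketch below compiles an arithmetic expression into code for a tiny, hypothetical stack machine and then runs it. The A-0 worked quite differently (it is usually described as linking prewritten subroutines), so treat this as a modern illustration of the general idea; the opcode names and functions are invented for the example.

```python
import ast
import operator

# Hypothetical opcodes for the supported operators.
OPCODES = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL", ast.Div: "DIV"}
ARITHMETIC = {"ADD": operator.add, "SUB": operator.sub,
              "MUL": operator.mul, "DIV": operator.truediv}


def compile_expression(source):
    """Translate an arithmetic formula into a list of stack-machine instructions."""
    code = []

    def emit(node):
        if isinstance(node, ast.BinOp) and type(node.op) in OPCODES:
            emit(node.left)                         # operands first...
            emit(node.right)
            code.append((OPCODES[type(node.op)],))  # ...then the operator
        elif isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            code.append(("PUSH", node.value))
        elif isinstance(node, ast.Name):
            code.append(("LOAD", node.id))
        else:
            raise SyntaxError(f"unsupported construct: {ast.dump(node)}")

    emit(ast.parse(source, mode="eval").body)
    return code


def run_stack_machine(code, variables):
    """Execute the instructions on a simple stack machine."""
    stack = []
    for instr in code:
        op = instr[0]
        if op == "PUSH":
            stack.append(instr[1])
        elif op == "LOAD":
            stack.append(variables[instr[1]])
        else:
            right, left = stack.pop(), stack.pop()
            stack.append(ARITHMETIC[op](left, right))
    return stack.pop()


program = compile_expression("(a + 2) * b")
print(program)  # [('LOAD', 'a'), ('PUSH', 2), ('ADD',), ('LOAD', 'b'), ('MUL',)]
print(run_stack_machine(program, {"a": 3, "b": 4}))  # 20
```

Once the formula is compiled, the same instruction list can be run with different variable values without translating it again.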

John von Neumann's EDVAC

The creators of the EDVAC were John Mauchly, J. Presper Eckert, and John von Neumann, who worked together at the Moore School of Electrical Engineering. Designed as the ENIAC's successor, the EDVAC combined a binary system with the stored-program concept. Using ultrasonic serial (delay-line) memory, it performed basic arithmetic with automatic checking for the US Army's Ballistic Research Lab. Its memory held 1,024 words of 44 bits each, about 5.6 kilobytes. The machine had a separate unit for each role: a dispatcher unit received instructions and directed the other units, while a computational unit performed arithmetic and transferred the results to memory. Its design, described by von Neumann in 1945, is now known as the von Neumann architecture, in which programs are stored in the same memory as data.
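The essence of the von Neumann architecture is that instructions and data share one memory, and the processor repeatedly fetches the next instruction, decodes it, and executes it. The Python sketch below models a tiny, hypothetical accumulator machine in this style; its instruction names and memory layout are invented for illustration and do not correspond to the EDVAC's actual instruction set.

```python
def run_machine(memory, max_steps=10_000):
    """Fetch-decode-execute loop for a tiny hypothetical accumulator machine.
    Instructions and data live in the same list, as in the stored-program design."""
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    for _ in range(max_steps):
        op, addr = memory[pc]  # fetch the instruction stored at address pc
        pc += 1
        if op == "LOAD":
            acc = memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "ADD":
            acc += memory[addr]
        elif op == "SUB":
            acc -= memory[addr]
        elif op == "JUMP":
            pc = addr
        elif op == "JUMPZ":    # conditional branch
            if acc == 0:
                pc = addr
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction {op!r} at address {pc - 1}")
    raise RuntimeError("program did not halt")


# Sum n + (n - 1) + ... + 1. Addresses 0-9 hold code; 10-12 hold data.
memory = [
    ("LOAD", 10),   # 0: acc = n
    ("JUMPZ", 9),   # 1: if n == 0, stop
    ("LOAD", 11),   # 2: acc = total
    ("ADD", 10),    # 3: acc = total + n
    ("STORE", 11),  # 4: total = acc
    ("LOAD", 10),   # 5: acc = n
    ("SUB", 12),    # 6: acc = n - 1
    ("STORE", 10),  # 7: n = acc
    ("JUMP", 0),    # 8: loop back
    ("HALT", 0),    # 9: stop
    5,              # 10: n
    0,              # 11: total
    1,              # 12: the constant 1
]
print(run_machine(memory)[11])  # 15
```

Because the program sits in the same `memory` list as its data, the loop that fetches instructions and the `STORE` operations that update `n` and `total` work on one shared storage area.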

UNIVAC

The UNIVAC was a computer built for commercial use that replaced punch cards with magnetic tape for storing user data. Eckert and Mauchly began developing it in 1946 and were eager to make this technology commercially available. Their goal, under a contract with the US Census Bureau, was to process millions of characters more efficiently, and the first UNIVAC was delivered to the Bureau in 1951. UNIVAC went on to prove its speed against rival stored-program computer designs. Rather than relying on someone to feed data into the machine, the UNIVAC could read and write data on magnetic tape automatically, greatly increasing the amount of data it could handle. In one famous application, a UNIVAC predicted from early returns that Dwight Eisenhower would win the 1952 US presidential election by a landslide, which he did.

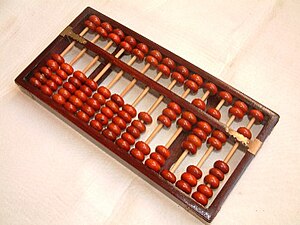

IBM

IBM set the baseline for personal computers (PCs) and came to dominate the market after introducing its multipurpose microcomputer, the IBM PC, in 1981. The company also developed many other influential technologies, including the floppy disk, the automated teller machine (ATM), the magnetic stripe card, and the relational database.

Software as a product

In 1969, IBM began selling its software separately from its hardware, which helped establish software as a product in its own right. The company has since made advancements in computer chips, artificial intelligence, cybersecurity, scientific research, and the Internet of Things.

RAMAC

The IBM 305 RAMAC, introduced in 1956, was a computer built around the IBM 350 Disk Storage Unit, the first commercial hard disk drive. Instead of reading records in order from punch cards, it could jump directly to any stored record within seconds and then move on to a different one. Data was stored as magnetized spots on a stack of spinning disks and read by a moving access arm.

IBM PC

The IBM Personal Computer was proposed by Bill Lowe and released in 1981 as the IBM 5150, offering strong performance and storage capacity for its time. Mark Dean, an African-American engineer, was instrumental in developing the IBM PC and later led the team that created the first 1-gigahertz processor chip. At IBM, he worked on computer circuit design and holds more than 20 patents.

Memory

The history of computer memory can be split into two periods, dominated first by magnetic core memory and then by semiconductor memory. Before these technologies, people tried to store bits on punch cards, capacitors, and even CRTs, with varying success.

Magnetic core memory

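Magnetic core memory was the predominant form of RAM from the mid-1950s into the 1970s; it was developed through the work of Jay Forrester, An Wang, and Ken Olsen, among others. It consisted of tiny magnetic rings (cores) threaded onto a grid of wires, with each core storing a single bit, a 0 or a 1, depending on the direction in which it was magnetized.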

Semiconductor memory

Semiconductor memory stores data in memory cells packed into a silicon integrated circuit chip. In dynamic RAM (DRAM), each cell typically consists of a single MOS transistor and a capacitor; in static RAM (SRAM), each cell uses several transistors (commonly six). Both designs allow random access for reading and writing data.

Storage

Computers need storage devices to keep programs and data when the power is off. Secondary storage refers to devices, such as disks, that hold data persistently; unlike primary memory (RAM), it is not accessed directly by the processor.

Three common types of magnetic storage hardware are:

- Hard disk drives store and retrieve data on rotating magnetic platters, using read/write heads mounted on an actuator arm that moves across the platter surface.

- Floppy disks consist of a thin, flexible magnetic disk inside a plastic case; data is read or written by a floppy disk drive. Today, their use is restricted to legacy computers.

- Magnetic disks, in general, have a rotating magnetic surface and a mechanical arm that moves over it, writing and reading data by magnetizing small regions of the surface while the disk spins at high speed.

Key Concepts

- Before the 20th century, most calculations were performed by humans.

- Some early mechanical tools provided assistance; these were called calculating machines, and the human operators were called computers.

- Charles Babbage began designing an automatic mechanical calculator around 1819; he called this the Difference Engine.

- The Difference Engine was an automated, mechanical calculator designed to tabulate polynomial functions.

- Most mathematical functions that engineers, scientists, and navigators commonly use can be approximated by polynomials.

- The ability to automatically produce these tables enabled much more rapid production of error-free tables.

- Babbage's difference engine was never completed in his lifetime.

- Babbage realized in 1833 that a much more general design, the Analytical Engine, was indeed possible.

- The engine would receive input from punched cards.

- The engine would have a printer, curve plotter, bell, and punched cards for output.

- More than one hundred years later, in 1936, Alan Turing proposed the idea that a device would be capable of performing any conceivable mathematical computation if it could be represented as an algorithm.

- In the 1930s it was demonstrated that there was a one-to-one correspondence between Boolean logic and certain electrical circuits.

- In May 1941, Konrad Zuse completed the Z3, recognized as the world's first programmable computer.

- The Z3 used the binary system rather than the decimal one found in Charles Babbage's designs.

- Zuse anticipated that machine instructions could be stored in the same location used for data.

Exercises

- M1021-10 Complete Merlin Mission Manager Mission M1021-10.

Experience Metadata

| Experience ID | W1021 |
|---|---|
| Next experience ID | |
| Unit | Computer history |
| Knowledge and skills | §10.223 |
| Topic areas | Contributors to computer science; Computer hardware |
| Classroom time | 30 minutes |
| Study time | 60 minutes |
| Acquired knowledge | understand the evolution of computer hardware systems through time; identify key contributors to the development of computer science |
| Acquired skill | demonstrate proficiency in explaining the progression of computer hardware systems through time; demonstrate proficiency in identifying the individuals and their contributions toward computer science |
| Additional categories | |

- [1] Morais, Betsy. "Ada Lovelace, the First Tech Visionary." The New Yorker, October 15, 2013. Accessed March 1, 2022.

- [2] "Punched Cards." In Revolution: The First 2000 Years of Computing. Computer History Museum. Accessed March 3, 2022.

- [3] Scharf, Caleb. "Where Would We Be Without the Paper Punch Card?" Slate Magazine. Accessed March 3, 2022.